All the theories and x-risk, but there was a very interesting morsel from the reporting. Bloomberg reported that Microsoft middle manager Sam Altman has been fundraising in the Middle East for a new AI chip company called Tigris.

If that doesn’t demonstrate the geopolitical power shift, nothing will. And:

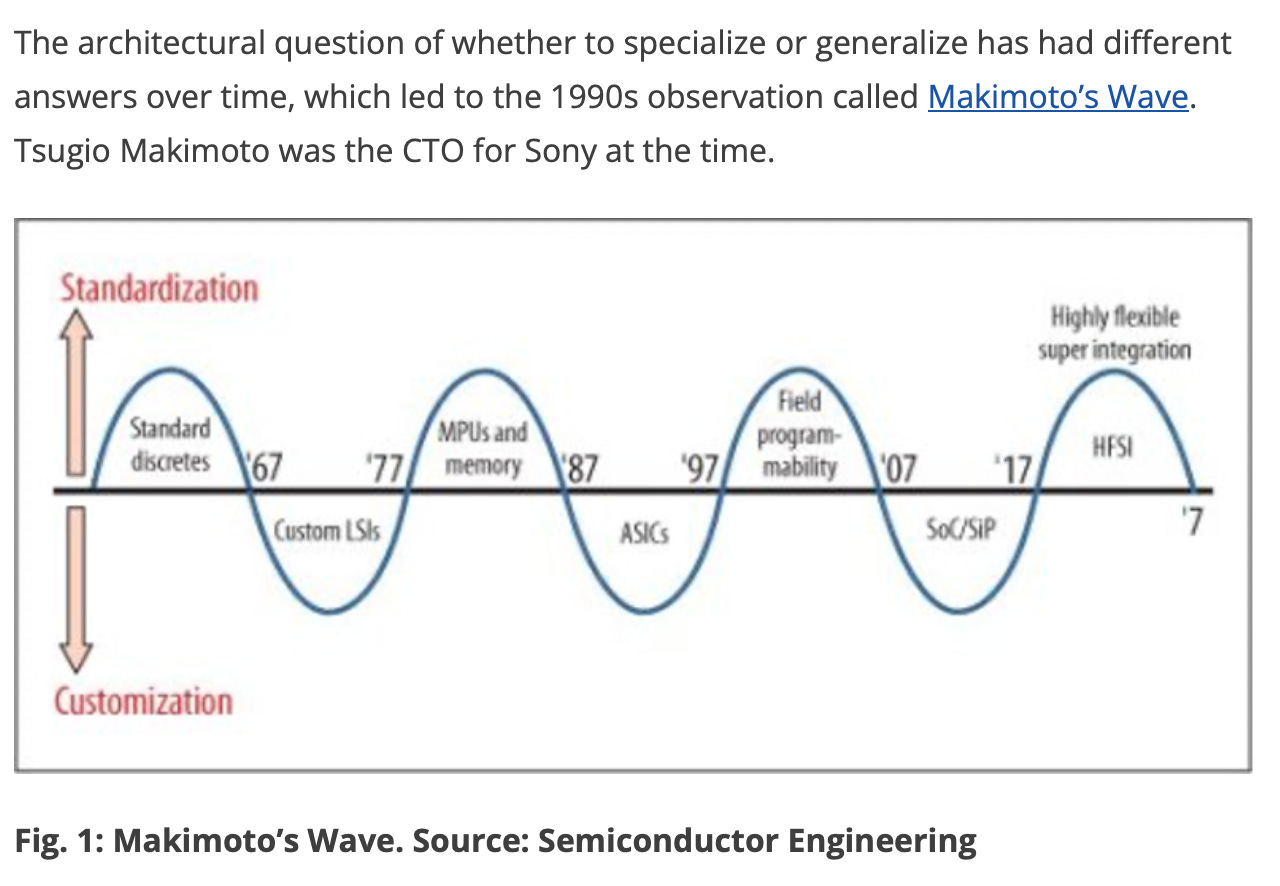

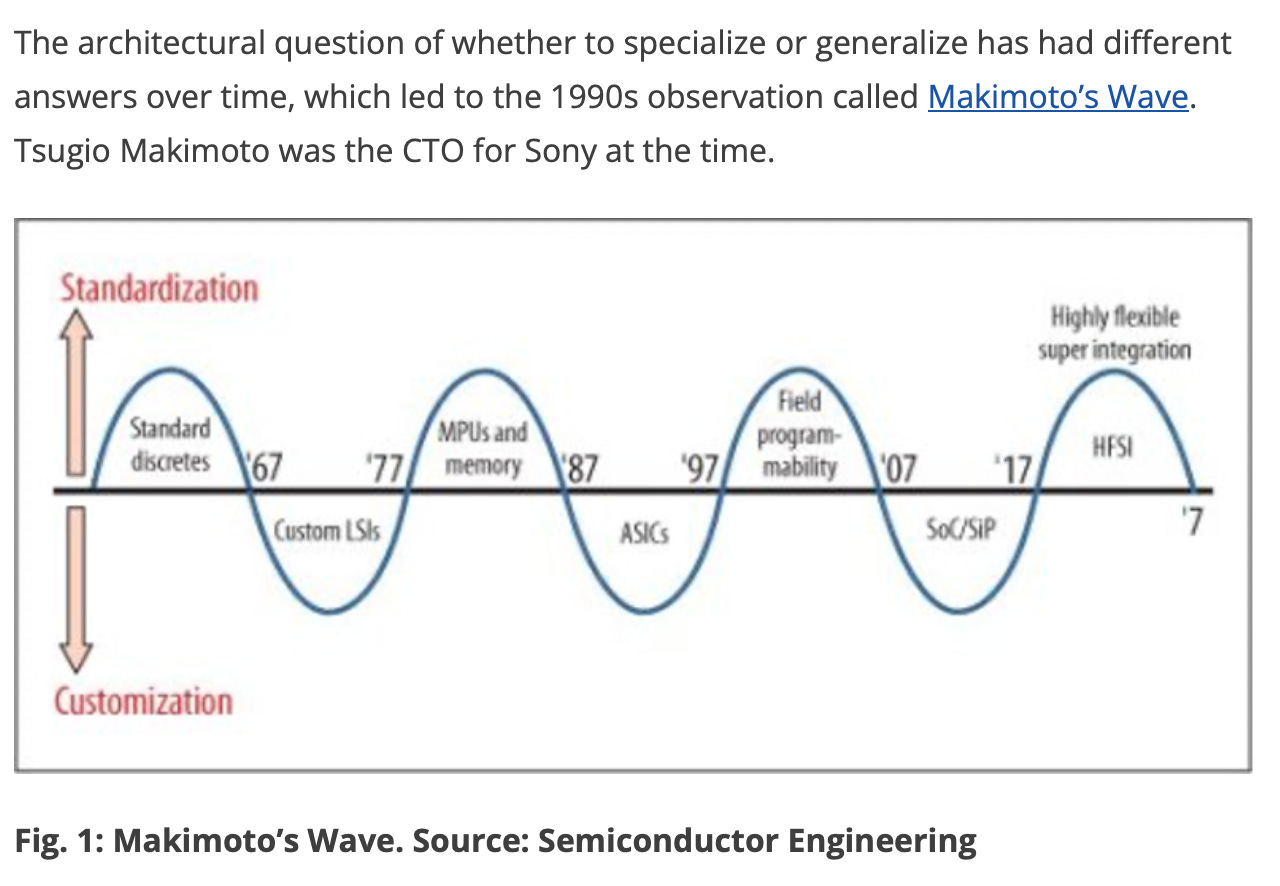

Yeah, when opex is in the billions, there has never been a stronger incentive for hardware innovation. Sam knows it, and hundreds of startups around the world know it. Yes, the first wave of AI hardware startups died/struggled, but THIS TIME IT’S DIFFERENT. I was reminded of the Makimoto Wave:

The argument behind the framework was “The decade beginning in 2017 will differ from the current one because, for many applications, chip integration density will be too high for the customized approach.” This sort of played out through chiplets and advanced packaging techniques like chip-on-wafer-on-silicon (CoWoS) and Wafer Level Fan-Out (WLFO). But it’s 2023, and the massive shift to customised AI silicon sort of breaks the framework halfway through the suggested timeline. Cost is, and will increasingly become, the bottleneck for AI progress (in training, but certainly in terms of serving customers). There are loads of ways to reduce costs; the easiest is to make the models smaller by sacrificing some accuracy. But the only way to reduce costs by 100x and make AI “too cheap to meter” is custom silicon.

If the reports are correct, Sam’s vision is to own the AI stack from the Jony Ive-designed consumer device down through the app store, OS, and the Tigris chip. The Apple smartphone playbook for AI.

Ownership of the entire AI stack is now the Amazon/Anthropic, Google/DeepMind, Microsoft (sans OpenAI?) goal. It’s always been my view that eventually all foundation model companies will need their own hardware because they can’t afford to be at the mercy of Nvidia. An interesting question for the group: can a foundation model company (Inflection AI, Cohere, Aleph Alpha, Mistral, etc.) survive without building its own hardware?

Speaking of Nvidia, I wrote something for this quarter’s management letter (become an LP if you want early access; not investment advice). This essay comes from the fact that it annoyed me that everyone said there was a GPU crunch, when technically that wasn’t correct. (I am great fun at dinner parties. Jk, I don’t go to dinner parties, I have children.) It also comes from Elad’s view that even Nvidia are underestimating the impact of AI. Let me know why I’m wrong in the comments.

👋 Lawrence

There never was a GPU crunch

Since the explosion of LLMs, the semiconductor supply chain has raced to produce enough chips to meet orders. In May 2023, Sam Altman, CEO of OpenAI, said: “We’re so short on GPUs, the less people that use the tool, the better.” Capability and context windows have been throttled throughout 2023, dampening demand. Today, Nvidia say the H100 will remain sold out until Q1 of next year. Recent reports from Korea suggest High Bandwidth Memory (HBM), the specialised DRAM used in AI accelerators, is sold out until 2025. Saudi Arabia bought 3,000 A100s. Baidu, ByteDance, Tencent, and Alibaba bought $1 billion worth of Nvidia chips this year and ordered another $4 billion worth for 2024. Every country, hyperscaler, and wannabe genAI leader has placed as large an order as Nvidia will accept. One AI startup founder jokes, “Investing in shares in Nvidia would be more lucrative than pursuing my startup.”

The dramatic increase in demand led to shortages and higher prices for the best AI chips, such as Nvidia’s H100. But the issue wasn’t producing enough logic chips, the GPU ASICs at the heart of the A/H100s. In Q2, TSMC’s cutting-edge 5nm process node, used to make the H100s, was at 88% capacity. Further capacity has been freed up as the smartphone market shrank 9% last year. And with an installed base of 6.4 billion devices and fewer new features, shipments will likely continue to fall, slowly freeing up even more 5nm capacity. For TSMC, the rise of AI couldn’t have come at a better time. GPUs were never and will never be the problem.

The problem is memory and the packaging that stacks the memory and logic together on a chip. The recent shortages are in high bandwidth memory (HBM3) production and TSMC’s 2.5D advanced packaging platform, chip-on-wafer-on-silicon (CoWoS). HBM is only produced in significant volumes by SK Hynix, with the other major DRAM providers, Samsung and Micron, ramping up production in 2024. For packaging, only TSMC’s CoWoS platform delivers the throughput and density that AI chips require. Mark Liu, Chairman of TSMC, said of CoWoS: “It is not the shortage of AI chips. It is the shortage of our CoWoS capacity. […] Currently, we cannot fulfil 100% of our customers’ needs, but we try to support about 80%. We think this is a temporary phenomenon. After our expansion of [advanced chip packaging capacity], it should be alleviated in one and a half years.”

Pointing the industry at 50% annual growth

The HBM and CoWoS bottlenecks will ease throughout 2024 as production capacity for both increases. Until the last few months, SK Hynix was the sole provider of the latest generation of HBM, HBM3, to Nvidia, a significant constraint on capacity and price. But Samsung recently entered the fray with HBM3e “Shinebolt”, and both Samsung and SK Hynix report they are already sold out of HBM3 memory until 2025. Micron, the last of the big three memory makers, claims the fastest HBM3 Gen2, which is currently sampling with partners and slated for high-volume production in early 2024. The DRAM market is notoriously cutthroat, and we can expect healthy competition on price as Micron, Samsung and SK Hynix compete for market share.

The other major bottleneck, advanced packaging, lacks the same competitive dynamics as the HBM market and, despite increasing demand, will likely continue to bottleneck capacity into 2025. Unlike HBM, there are no alternatives to TSMC or alternative packaging platforms for AI chips. Samsung and Amkor offer inferior alternatives regarding both yields and the ability to complete the end-to-end process. Some tools in alternative packaging platforms like Wafer Level Fan-Out (WLFO), used primarily for smartphone SoCs, can be repurposed for some CoWoS process steps. But these are inefficient workarounds. The reality is TSMC and CoWoS are the only game in town. In the medium term, it’s about building more factories. TSMC plans to establish a new advanced packaging fab in Taiwan and expects to invest nearly $3 billion in the project, which is due to come online sometime in 2027. The monthly output of CoWoS packages remains limited to around 100,000 units as of early 2023. Efforts are underway to raise this to ~150,000 units per month eventually, but progress has been slow. And every new AI chip released in 2024 and 2025 must go through CoWoS: Nvidia’s H100 and the H200 in 2025; AMD’s Instinct MI300; Broadcom/Google’s TPUs and the Broadcom/Meta MTIA ASIC; Alchip/AWS’s Trainium1 and Inferentia2; and Microsoft’s Maia 100 accelerator, too.

HBM and CoWoS are likely to ramp to meet the industry forecast of 50% YoY growth, from $30 billion to over $240 billion in the next five years. HBM certainly, with a little more uncertainty about CoWoS. Expanding capacity costs billions, and more than most industries, the semiconductor industry is highly sensitive to demand forecasting. Unsurprisingly, Nvidia, AMD, and TSMC have converged on the 50% market growth number. This is the number all suppliers in the nearly trillion-dollar ecosystem are working towards. The significant risk is that even with the enormous purchases of chips and the c. $55bn invested in AI start-ups in 2023, we don’t yet have a clear idea of the application space. The industry is buying chips ahead of demand. Nation states are buying billions of dollars of chips because of FOMO. Demand forecasting is always uncertain, but never more so than with AI demand, where there has been a step-change in capabilities with large language models, and use cases are still unclear. The rapid success of ChatGPT, which reached 180 million users in 9 months and became the fastest-growing consumer product in history, heavily hints at broad-based demand. Never in the history of tech has so much money and supply been waiting for demand.
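A quick sanity check on that growth number, as a rough sketch rather than anyone's official model. It assumes simple annual compounding from a $30 billion base year; the function names `project` and `implied_cagr` are mine, purely for illustration. Taken literally, 50% a year for five years lands at roughly $228 billion, a touch under $240 billion, and hitting $240 billion in five years needs closer to 52% a year. Close enough for a forecast, but it shows how sensitive the end point is to a couple of percentage points.

```python
def project(start_bn: float, growth: float, years: int) -> list[float]:
    """Market size ($bn) for year 0..years, compounding once per year.

    Assumption: a constant growth rate, applied annually to the prior year.
    """
    return [start_bn * (1 + growth) ** y for y in range(years + 1)]


def implied_cagr(start_bn: float, end_bn: float, years: int) -> float:
    """Constant annual growth rate that takes start_bn to end_bn in `years`."""
    return (end_bn / start_bn) ** (1 / years) - 1


if __name__ == "__main__":
    # $30bn base and 50% YoY are the figures quoted in the text.
    for year, size in enumerate(project(30.0, 0.50, 5)):
        print(f"year {year}: ${size:,.1f}bn")

    # What growth rate would actually be needed to reach $240bn in five years?
    rate = implied_cagr(30.0, 240.0, 5)
    print(f"CAGR needed for $240bn in 5 years: {rate:.1%}")
```

Swap in different rates to see the over/underestimate scenarios: at 40% a year the same five years gets you to about $161 billion, at 60% about $315 billion.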

What if the industry is under or overestimating demand?